30 How To Scrape Javascript Content

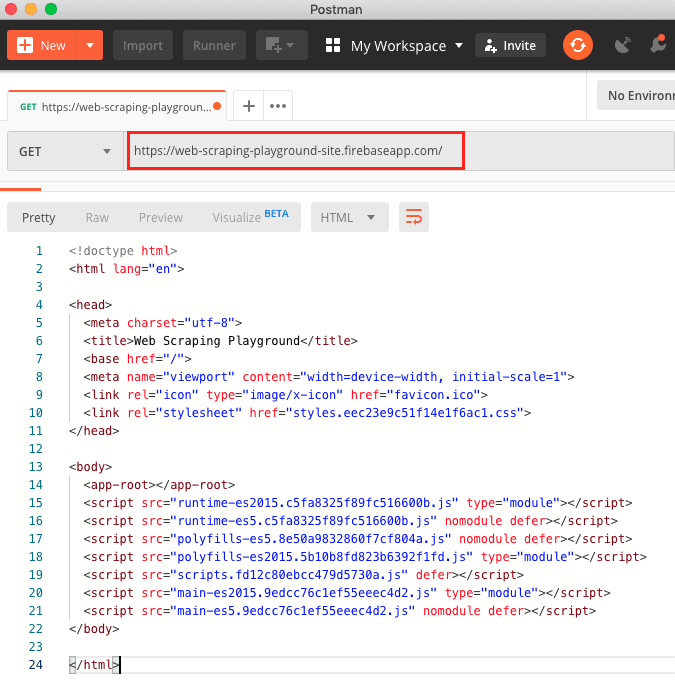

Demo of the Render() function: how we can use requests-html to render webpages for us quickly and easily, enabling us to scrape the data from javascript dynamic...

You need a browser environment in order to execute the Javascript code that renders the HTML. If you open this website (https://web-scraping-playground-site.firebaseapp ) in your browser, you will see a simple page with some content.

How To Scrape Javascript Content From Any Website Parsehub

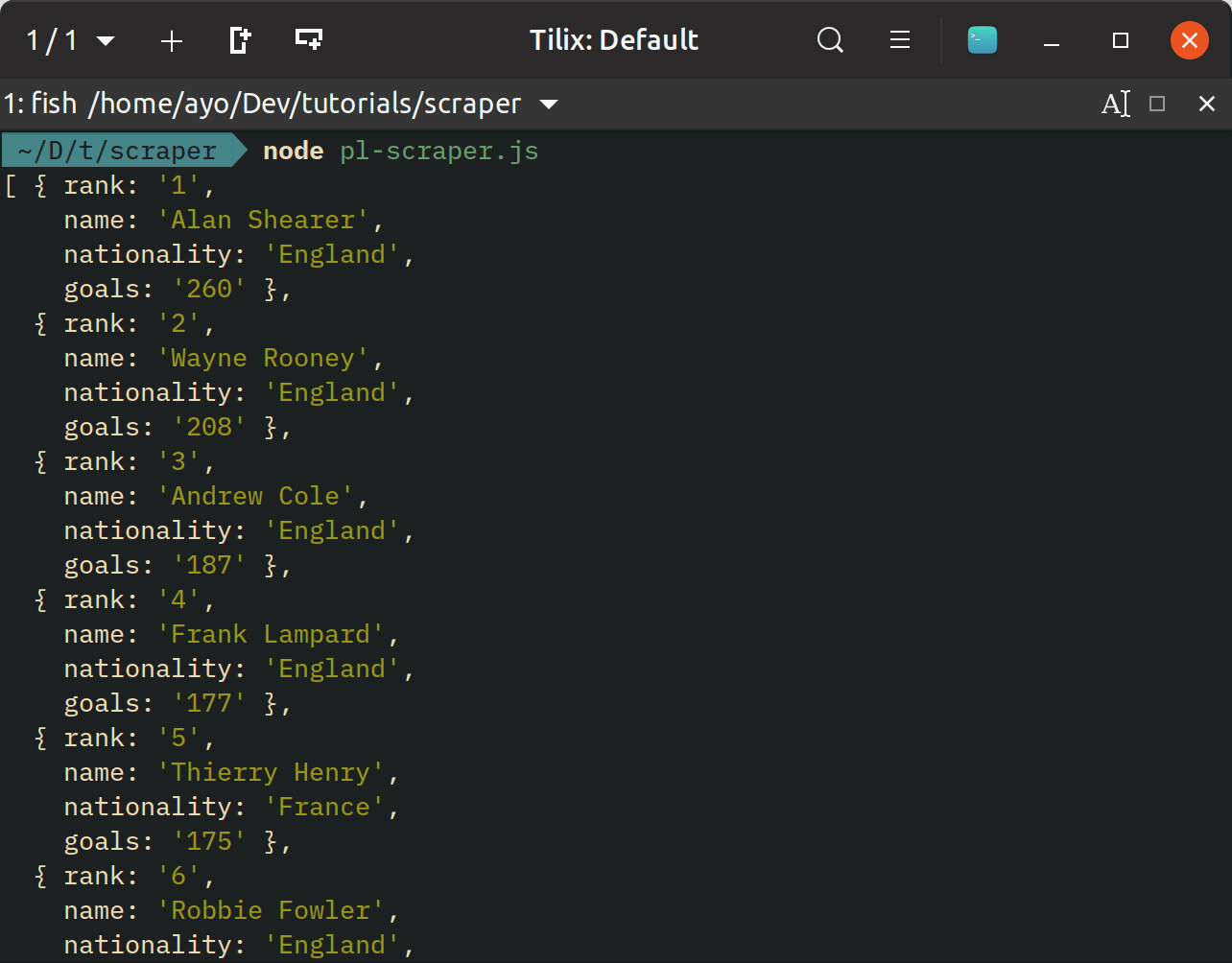

RUNNING THE SCRAPER. Running the scraper is as easy as navigating to your project directory in the terminal and running npm start. npm start runs node index, the command we specify in the scripts section of package.json. You could also run node index directly in a terminal/console.

How to scrape javascript content. When read into PhantomJS, the scrape.js file will do the following: get the HTML of the URL you provide it with (in this case 'https://www.xx.co.uk/xx'); write the HTML and JavaScript code to a file named 'website_phantom.html', which will appear in the \phantomjs\bin folder; …

Unless you can analyze the javascript or intercept the data it uses, you will need to execute the code as a browser would. In the past I ran into the same issue and used selenium and PhantomJS to render the page. After the page rendered, I would use the WebDriver client to navigate the DOM and retrieve the content I needed, post AJAX.

If there's content you can see in your browser, there's HTML there. You don't need special tools to scrape JavaScript pages (other than the tools necessary to execute the JavaScript, or trigger it to execute), just like you don't need special tools to scrape .aspx pages and PHP pages.

If your page has javascript, try this. Try some Firefox extensions like httpFox or liveHttpHeaders and load a page that uses an ajax request. Scrapy does not automatically identify the ajax requests; you have to manually search for the appropriate ajax URL and then make a request to it.

This means that if we just scrape the HTML, the JavaScript won't be executed, and thus we won't see the tags containing the expiration dates. This brings us to requests_html. Using requests_html to render JavaScript. Now, let's use requests_html to run the JavaScript code in order to render the HTML we're looking for.

Scraping JavaScript protected content. Here we come to a new milestone: scraping JavaScript-driven or JS-rendered websites. Recently a friend of mine got stumped as he was trying to get the content of a website using the PHP simplehtmldom library. He kept failing and finally found out the site was saturated with JavaScript code.
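Once the ajax URL has been found by hand in the browser's network traffic, requesting it directly can be sketched as follows. This is a minimal sketch using only the Python standard library, not Scrapy itself. The function name `fetch_ajax_json`, the `opener` parameter and the example URL are hypothetical, and the sketch assumes the endpoint returns JSON.

```python
import json
from urllib.request import Request, urlopen


def fetch_ajax_json(url, opener=urlopen):
    """Request an ajax endpoint directly and decode its JSON body.

    `opener` defaults to urllib's urlopen; it is a parameter only so the
    function can be exercised without a network connection.
    """
    # Some endpoints inspect this header to recognise ajax calls
    # (an assumption; check what the real request sends in the browser).
    request = Request(
        url,
        headers={
            "X-Requested-With": "XMLHttpRequest",
            "Accept": "application/json",
        },
    )
    with opener(request) as response:
        return json.loads(response.read().decode("utf-8"))


# Example call (hypothetical URL, left commented out to avoid network access):
# data = fetch_ajax_json("https://example.com/api/items?page=1")
```

The headers and query parameters to send are whatever the browser sent for the real request, so they should be copied from the captured traffic rather than from this sketch.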

Thanks to Node.js, JavaScript is a great language to use for a web scraper: not only is Node fast, but you'll likely end up using a lot of the same methods you're used to from querying the ...

If you are faced with scraping a website like this, you will need to use Puppeteer. Puppeteer controls Chrome to visit the website and trigger the JavaScript events that load the content; once the content is loaded, you can scrape the required data out. There is a lot you can do with Puppeteer.

For years I have been turning to Web Scraping to download / scrape web content, but only recently have I wanted to dive deep into the subject and become aware of all the techniques out there. Ranging from the simple Excel "From Web" feature to simulating browser interaction - there are tons of ways to get the ...

Using Selenium with geckodriver is a quick way to scrape web pages that use javascript, but there are a few drawbacks.

12/10/2020 · Get ParseHub for free: https://bit.ly/3cSeexQ Scraping Javascript content can be quite a challenge. The reason for this is that a lot of web scrapers struggle ...

Browsing and Scraping Javascript Content. Now, let's get started with our project. Install and open ParseHub. Click on "New Project" and enter the URL you will be scraping data from.

Web scraping JavaScript content. Brian Scally · Data processing · January 13, 2019 · January 23, 2019 · 5 Minutes. Introduction. Web scraping is an extremely powerful method for obtaining data that is hosted on the web. In its simplest form, web scraping involves accessing the HTML code (the markup language on which websites are built ...

Download the response data with cURL. Write a Node.js script to scrape multiple pages. Case 2 - Server-side Rendered HTML. Find the HTML with the data. Write a Node.js script to scrape the page. Case 3 - JavaScript Rendered HTML. Write a Node.js script to scrape the page after running JavaScript. That's a wrap.

Web Scraping, which is an essential part of Getting Data, used to be a very straightforward process: locate the HTML content with an XPath or CSS selector and extract the data. That changed when Web developers started inserting Javascript-rendered content in the web page.

Short tutorial on scraping Javascript generated data with R using PhantomJS. When you need to do web scraping, you would normally make use of Hadley Wickham's rvest package. This package provides an easy to use, out of the box solution to fetch the html code that generates a webpage.

Rendering JavaScript Pages. Voilà! A list of the names and birthdays of all 45 U.S. presidents. Using just the request-promise module and Cheerio.js should allow you to scrape the vast majority of sites on the internet. Recently, however, many sites have begun using JavaScript to generate dynamic content on their websites.

Learn web scraping with Javascript and NodeJS with this step-by-step tutorial. We will see the different ways to scrape the web in Javascript through lots of examples. Javascript has become one of the most popular and widely used languages due to the massive improvements it has seen and the introduction of the runtime known as NodeJS.

Scraping javascript website content. There is now only one part missing, which is extracting the actual content from the html. This can be done in many different ways, and it depends on the language you are using to code your application. We always suggest using one of the many available libraries that are out there.

To scrape content from a static page, we use BeautifulSoup as our scraping package, and it works flawlessly for static pages. We use requests to load the page into our python script. Now, if the page we are trying to load is dynamic in nature and we request it with the requests library, the server sends back the JS code that is meant to be executed locally, and requests does not execute it.
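The effect on a static parser can be sketched without any network access. The example below is only an illustration: it uses the standard library's `html.parser` as a stand-in for BeautifulSoup, and `RAW_HTML` is a made-up page whose list is filled in by a script.

```python
from html.parser import HTMLParser

# Hypothetical page source: the list stays empty until a browser runs the script.
RAW_HTML = """
<html><body>
  <ul id="items"></ul>
  <script>
    var li = document.createElement("li");
    li.textContent = "Rendered item";
    document.getElementById("items").appendChild(li);
  </script>
</body></html>
"""


class TagCounter(HTMLParser):
    """Count the start tags found in the raw source, as a static parser sees it."""

    def __init__(self):
        super().__init__()
        self.counts = {}

    def handle_starttag(self, tag, attrs):
        self.counts[tag] = self.counts.get(tag, 0) + 1


counter = TagCounter()
counter.feed(RAW_HTML)

# The <ul> container and the <script> are in the source, but no <li> exists:
# the script body is treated as plain text and never executed.
print(counter.counts.get("ul", 0), counter.counts.get("script", 0), counter.counts.get("li", 0))
```

A browser loading the same source would show one list item, which is the gap that rendering tools are meant to close.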

This allows us to scrape content from a URL after it has been rendered as it would be in a standard browser. Before beginning you'll need to have Node.js installed. Let's get started by creating a project folder, initialising the project and installing the required dependencies by running the following commands in a terminal: mkdir scraper cd ...

If you want to scrape all the data, you should first find out the total count of sellers. Then loop through the pages by passing incremental page numbers to the URL in the payload. Below is the full code that I used to scrape; I loop through the first 50 pages to get the content on those pages.

In the Web Page Scraping with jsoup article I described how to extract data from a web page using the open-source jsoup Java library. As an HTML parser, jsoup only sees the raw page source and is completely unaware of any content that is added to the DOM via JavaScript after the initial page load.
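The original code for the 50-page loop mentioned above is not included here, so the following is only a sketch of the idea of passing incremental page numbers in the payload. The endpoint `BASE_URL`, the `page` parameter name and the `page_urls` helper are all hypothetical; a real site may name its paging parameter differently.

```python
from urllib.parse import urlencode

BASE_URL = "https://example.com/sellers"  # hypothetical endpoint


def page_urls(base_url, last_page, extra_params=None):
    """Yield one URL per page, numbering pages from 1 to last_page."""
    for page in range(1, last_page + 1):
        # Merge any fixed parameters with the incrementing page number.
        params = dict(extra_params or {}, page=page)
        yield f"{base_url}?{urlencode(params)}"


urls = list(page_urls(BASE_URL, 50))
print(urls[0])    # first page URL
print(len(urls))  # number of pages to request
```

Each URL would then be requested in turn, and the loop's upper bound would come from the total seller count divided by the page size.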

6/12/2016 · When you want to scrape javascript generated content from a website, you will realize that Scrapy and other web scraping libraries cannot run javascript code while scraping. First, you should try to find a way to make the data visible without executing any javascript code. If you can't, you have to use a headless or lightweight browser.

Welcome to part 4 of the web scraping with Beautiful Soup 4 tutorial mini-series. Here, we're going to discuss how to parse data that is dynamically updated via javascript. Many websites supply data that is dynamically loaded via javascript. In Python, you can make use of jinja templating and do this without javascript, but many websites use ...
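One case where the data can be reached without executing any javascript is a page that ships it as JSON inside an inline script tag. The sketch below assumes that situation; `RAW_HTML`, the `initial-data` id and the `extract_embedded_json` helper are hypothetical and would need to match whatever the real page source contains.

```python
import json
import re

# Hypothetical page source with the data embedded in an inline script.
RAW_HTML = """
<html><body>
<div id="app"></div>
<script id="initial-data" type="application/json">
{"products": [{"name": "Widget", "price": 9.5}]}
</script>
</body></html>
"""

# Capture the body of the script tag whose id is "initial-data".
SCRIPT_RE = re.compile(
    r'<script[^>]*\bid="initial-data"[^>]*>(.*?)</script>',
    re.DOTALL | re.IGNORECASE,
)


def extract_embedded_json(html):
    """Return the decoded JSON from the inline data script, or None if absent."""
    match = SCRIPT_RE.search(html)
    if match is None:
        return None
    return json.loads(match.group(1))


data = extract_embedded_json(RAW_HTML)
print(data["products"][0]["name"])
```

When no such embedded data or ajax endpoint exists, the headless browser route described above remains the fallback.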

How To Scrape Dynamic Web Pages Rendered With Javascript Agenty

Web Scraping In Python Python Scrapy Tutorial

The Ultimate Guide To Web Scraping With Node Js

Web Scraping Techniques Apify Documentation

Web Scraping Javascript Page With Python Stack Overflow

Scraping Data From Javascript Web Sites With Power Query

How To Scrape Javascript Content From Any Website 2020 Tutorial

Web Scraping Best Practices Scraperapi Cheat Sheet Scraperapi

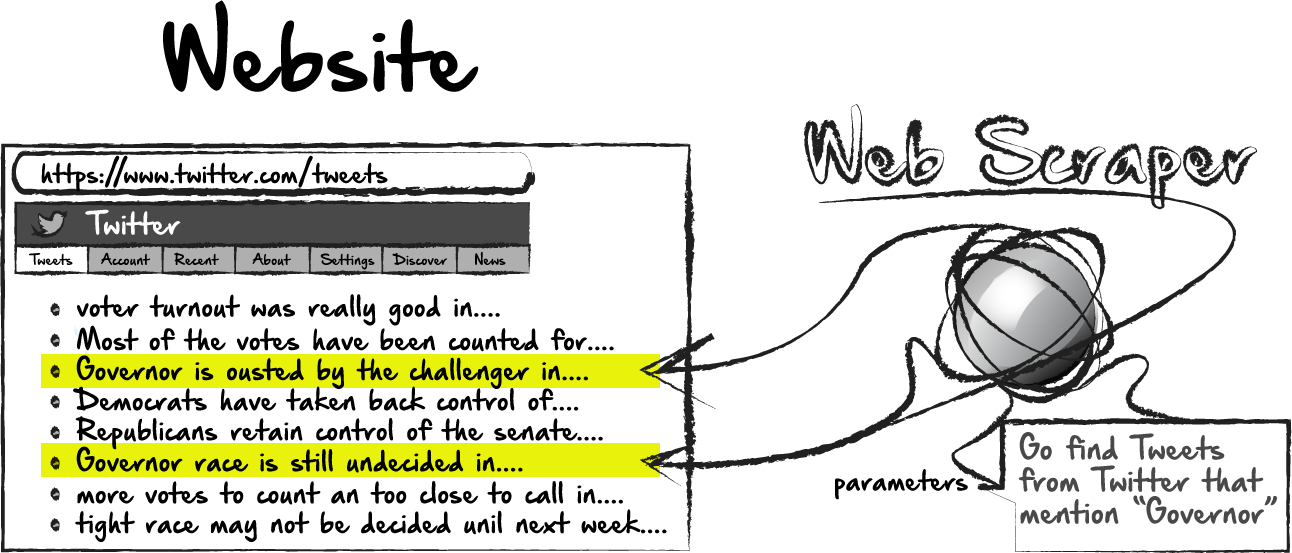

What Is Content Scraping Web Scraping Cloudflare

How To Scrape Websites With Node Js By John Au Yeung

Best Open Source Javascript Web Scraping Tools And Frameworks

Web Scraping How To Access Content Rendered In Javascript

The Simple Way To Scrape An Html Table Google Docs

Scraping Tables From A Javascript Webpage Using Selenium

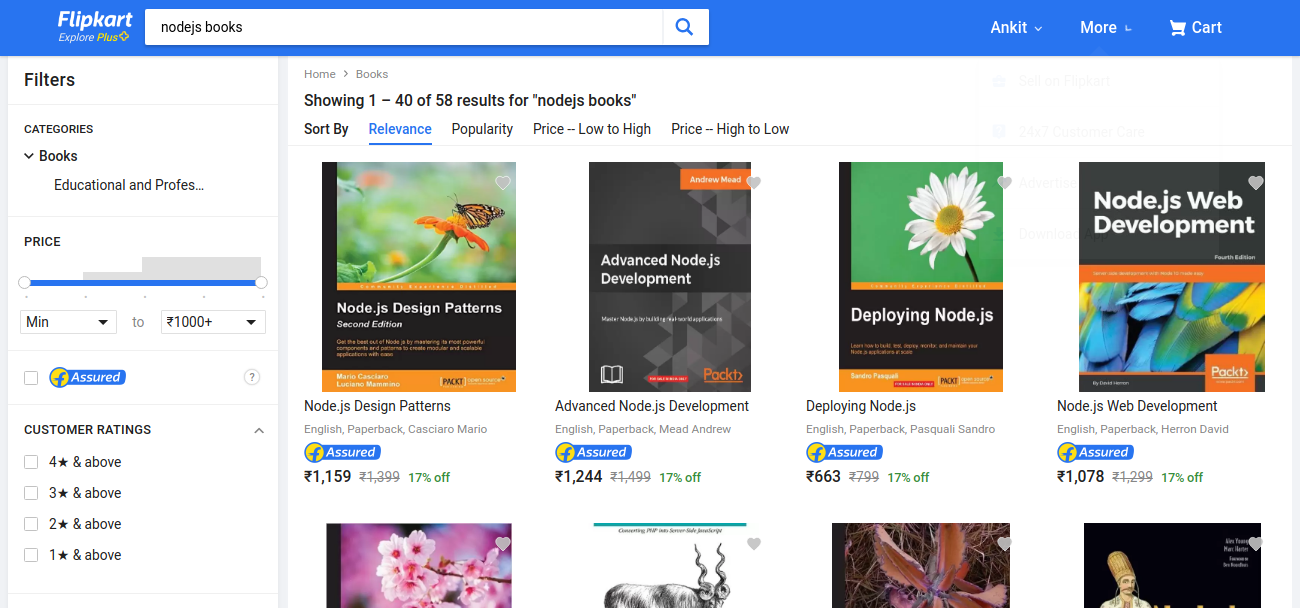

How To Perform Web Scraping Using Node Js Part 2 By Ankit

How To Build A Web Scraper With Javascript And Node Js Geosurf

The Data School Web Scraping Javascript Content

Building A Web Scraper From Start To Finish Hacker Noon

4 Tools For Web Scraping In Node Js

Scraping Dynamic Websites Using Scraper Api And Python Learn

Web Scraping Using Python Datacamp

How To Scrape Html From A Website Built With Javascript

Web Scraping Javascript Tags In Python Python In Plain English
