28 How To Make A Web Crawler Javascript

An easy to use, powerful crawler implemented in PHP. Can execute Javascript. - GitHub - spatie/crawler: An easy to use, powerful crawler implemented in PHP. Can execute Javascript. Web Crawler/Spider for NodeJS + server-side jQuery ;-) - GitHub - bda-research/node-crawler: Web Crawler/Spider for NodeJS + server-side jQuery ;-)

How To Make A Web Crawler In Javascript Node Js

May 22, 2017 - It is a javascript web crawler written under 1000 lines of code. This should put you on the right track. ... You can make a web crawler driven from a remote json file that opens all links from a page in new tabs as soon as each tab loads except ones that have already been opened.

How to make a web crawler javascript. In addition, some web pages are all rendered through Javascript like using AngularJS, your crawler may not be able to get any content at all. I would say there's no silver bullet that can make a perfect and robust crawler for all web pages. You need tons of robustness tests to make sure that it can work as expected. First of all, JavaScript crawling is slower and more intensive for the server, as all resources (whether JavaScript, CSS, images etc.) need to be fetched to render each web page. This won't be an issue for smaller websites, but for a large website with many thousands or more pages, this can make a huge difference. This web crawler app can analyze your website and make your website searchable by a search engine. This lists crawler app provides you a list of pages with issues that could affect your website. You can increase Google ranking effortlessly. This web crawler online offers real time visual image of a responsive website. Link: https://cocoscan.io/

Since web administrators will generally automatically treat Selenium-powered web crawlers as threats, you need to protect your web crawler. Nobody can guarantee that your web scraper will never get blacklisted, but choosing the right proxy can make a big difference and improve the life expectancy of your crawler. Here are the basic steps to build a crawler: Step 1: Add one or several URLs to be visited. Step 2: Pop a link from the URLs to be visited and add it to the Visited URLs thread. Step 3: Fetch the page's content and scrape the data you're interested in with the ScrapingBot API. Step 4: Parse all the URLs present on the page, and add them to ... My original how-to article on making a web crawler in 50 lines of Python 3 was written in 2011. I also wrote a guide on making a web crawler in Node.js / Javascript. Check those out if you're interested in seeing how to do this in another language. If Java is your thing, a book is a great investment, such as the following.

We can build our own Crawler project using the Spatie package, where, as it is the name, will automatically crawl every link that our web has. The package also has callbacks if anything failed or ... A web crawler, also known as a 'spider' has a more generic approach! You can define a web crawler as a bot that systematically scans the Internet for indexing and pulling content/information. It follows internal links on web pages. In general, a "crawler" navigates web pages on its own, at times even without a clearly defined end goal. Feb 11, 2015 - For more advanced crawlers we'll ... node-crawler, and spider. ... If you have any comments or questions, feel free to post them on the source of this page in GitHub. Source on GitHub. Comment on this post ... Gábor who writes the articles of the Code Maven site offers courses in in the subjects that are discussed on this web ...

I disable JavaScript at this point by pressing shift-command-P, entering javascript and selecting the Disable JavaScript option. Remember to refresh the page by clicking the refresh button or pressing command-R. This step is crucial for making decisions about creating the web crawler as this allows me to see the page as Scrapy will see it. If a website makes heavy use of JavaScript to operate, it's more likely WebCopy will not be able to make a true copy. Chances are, it will not correctly handle dynamic website layouts due to the heavy use of JavaScript. 9. HTTrack. As a website crawler freeware, HTTrack provides functions well suited for downloading an entire website to your PC ... For complex websites that use React, Vue or other front-end javascript libraries and require JavaScript execution, spawn a headless browser with PlaywrightCrawler or PuppeteerCrawler ... All the crawlers are automatically scaled based on available system resources using the AutoscaledPool class.

Be well aware that it might slow down the web crawler, you would however make better crawlers. We will be using only the waitUntil option and we will be setting it to networkidle0 so as to make sure the page.goto function resolves when all the network connections have been settled. Thanks to Node.js, JavaScript is a great language to u se for a web scraper: not only is Node fast, but you'll likely end up using a lot of the same methods you're used to from querying the ... As you can see above, with JavaScript rendering disabled, crawlers can’t process a website’s code or content and, therefore, the crawled data is useless. How to start crawling JavaScript? The simplest possible way to start with JavaScript crawling is by using Screaming Frog SEO Spider .

Web scraping using Python involves three main steps: 1. Send an HTTP request to the URL of the webpage. It responds to your request by returning the content of web pages. 2. Parse the webpage. A parser will create a tree structure of the HTML as the webpages are intertwined and nested together. This is a tutorial made by Matt Hacklings about web scraping and building a crawler using JavaScript, Phantom.js, Node.js, Ajax. This include codes for creating a JavaScript crawler function and the implementation of limits on the maximum number of concurrent browser sessions performing the ... Creating the web crawler in JavaScript. Let's remind ourselves what we're trying to do: 1) Get a web page. 2) Try to find a word on a given web page. 3) If the word isn't found, collect all the links on that page so we can start visiting them. Let's start coding each of those steps up. I'll be using Atom as my text editor to write the ...

5/11/2015 · Go ahead and create an empty file we'll call crawler.js and add these three lines: var request = require ('request'); var cheerio = require ('cheerio'); var URL = require ('url-parse'); In Atom it looks like this: These are the three libraries in this web crawler that we'll use. Request is used to make HTTP requests. In this video we will look at Python Scrapy and how to create a spider to crawl websites to scrape and structure data.Download Kite free:https://kite /dow... Simple web crawler in java source code Problem: I'm working on a project which needs to design a web crawler in Java. I have no idea how to integrate it in a web crawler to extract content from different pages.

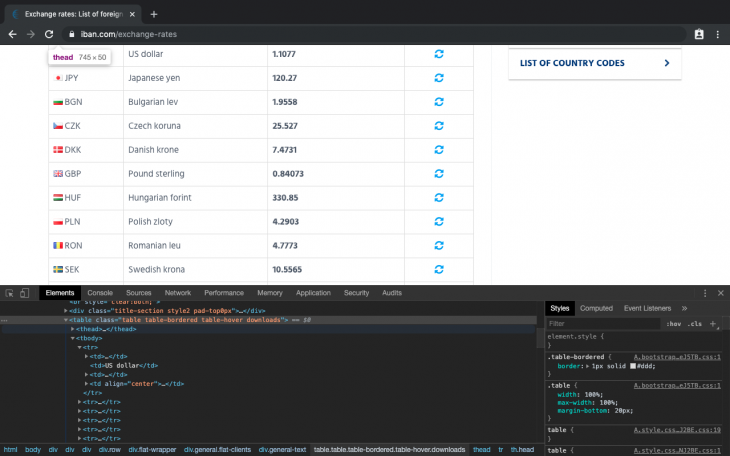

Sep 27, 2020 - From the point I started to learn about web development I was enthusiastic about web crawling. Most of the time it is called “web crawling”, “web scraping” or “web spider”. Going through the web and… Here is the basics of how you bootstrap your web crawler, from scratch. Your web crawler is really just a function. It's input is a set of seed URLs (or entry points), and it's output is a set of HTML pages (or results). Inside this web crawler ... Let's build a basic web crawler that uses Node workers to crawl and write to a database. The crawler will complete its task in the following order: Fetch (request) HTML from the website. Extract the HTML from the response. Traverse the DOM and extract the table containing exchange rates. Format table elements ( tbody, tr, and td) and extract ...

A Web Crawler is a program that navigates the Web and finds new or updated pages for indexing. The Crawler starts with seed websites or a wide range of popular URLs (also known as the frontier) and searches in depth and width for hyperlinks to extract.. A Web Crawler must be kind and robust. Kindness for a Crawler means that it respects the rules set by the robots.txt and avoids visiting a ... Jun 02, 2019 - So what’s web scraping anyway? It involves automating away the laborious task of collecting information from websites. There are a lot of use cases for web scraping: you might want to collect prices from various e-commerce sites for a price comparison site. Or perhaps you need flight times and BeautifulSoup — The nifty utility tool I used to build my web crawler. Web Scraping with Python — A useful guide to learning how web scraping with Python works. Lean Startup - I learned about rapid prototyping and creating an MVP to test an idea from this book. I think the ideas in here are applicable across many different fields and also ...

Learn web scraping with Javascript and NodeJS with this step-by-step tutorial. We will see the different ways to scrape the web in Javascript through lots of example. Javascript has become one of the most popular and widely used languages due to the massive improvements it has seen and the introduction of the runtime known as NodeJS. 21/2/2019 · Activate JavaScript Crawling. JavaScript crawling can be enabled in the project settings by account owners. Just click on "Enable JavaScript crawling". Figure 2: Enable JavaScript Crawling in your project settings. Crawler Settings. We have made sure that the most important settings are available even with JavaScript crawling. Sep 06, 2018 - The crawler provides intuitive interface to crawl links on web sites. Example: ... The call to configure is optional, if it is omitted the default option values will be used. onSuccess callback will be called for each page that the crawler has crawled. page value passed to the callback will ...

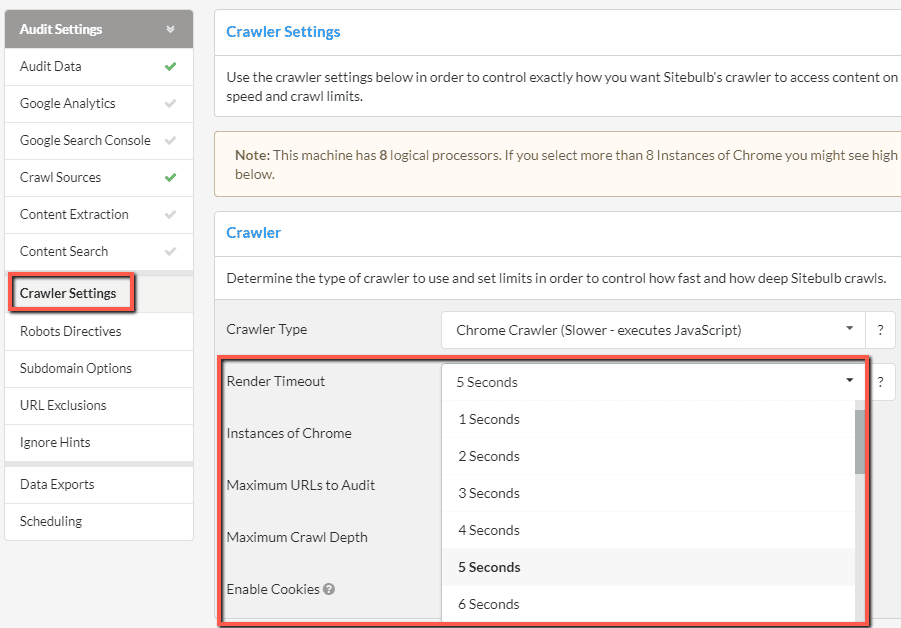

25/8/2021 · Selecting the Chrome Crawler in the crawler settings will allow you to crawl JavaScript websites. Trying to crawl a JavaScript website without rendering. As a brief aside, we're first going to investigate what happens when you try to crawl a JavaScript website without rendering, which means selecting the 'HTML Crawler' in the settings. I wrote a quick web crawler (spider) to look for regex keywords in pages given any URL Nov 15, 2020 - Building a simple web crawler with Node.js and JavaScript · We will be using the modules cheerio and request. Install these dependencies using the following commands · npm install --save cheerio npm install --save request · The following code imports the required modules and makes a request ...

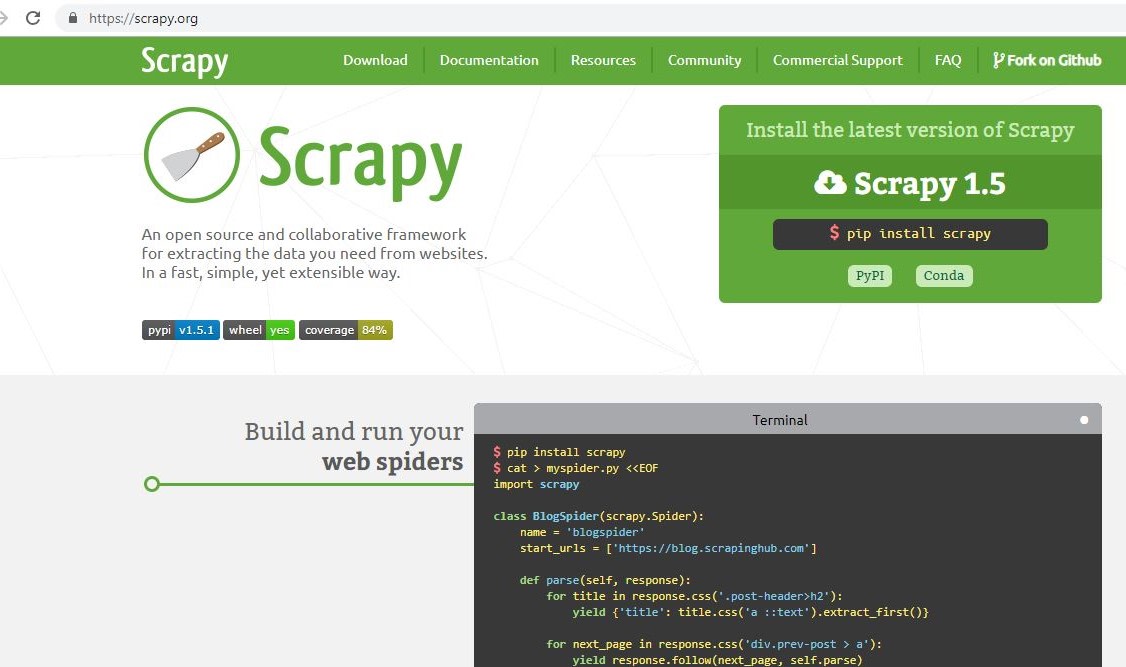

Open Source Web Crawler in Python: 1. Scrapy : Language : Python. Github star : 28660. Support. Description : Scrapy is a fast high-level web crawling and web scraping framework, used to crawl websites and extract structured data from their pages. It can be used for a wide range of purposes, from data mining to monitoring and automated testing. Dec 17, 2018 - A Computer Science portal for geeks. It contains well written, well thought and well explained computer science and programming articles, quizzes and practice/competitive programming/company interview Questions. A quick-fire guide on how to crawl ....uk/crawl-javascript-seo/). Setting Up a New Project. Enter target URL to scrape. Parse the content (usually HTML) and extract potential URLs that we want to crawl. The crawler will complete its task in the following order: Fetch (request) HTML from the website. We make a HTML file ...

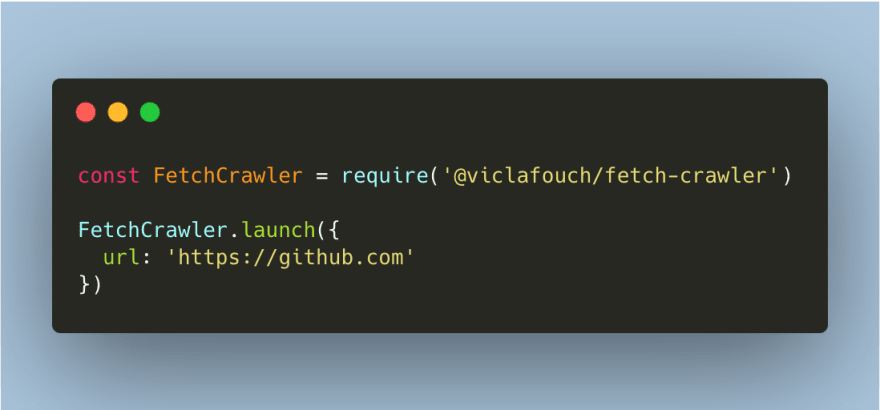

Javascript is a good language for building crawlers as it's async, easy to code and deploy and it allows you to launch several things to crawl without blocking any part of your code. Just keep in mind that if you don't properly write your javascri... Introduction of Fetch-crawler (Node JS) Fetch Crawler is designed to provide a basic, flexible and robust API for crawling websites. The crawler provides simple APIs to crawl static websites with the following features: Distributed crawling; Configure parallel, retry, max requests, time between requests (to avoid being blocked by the website) ...

Javascript Seo How To Crawl Javascript Rich Websites

Javascript Seo How To Crawl Javascript Rich Websites

60 Innovative Website Crawlers For Content Monitoring

60 Innovative Website Crawlers For Content Monitoring

How To Build A Web Crawler Python Tutorial For Beginners

How To Build A Web Crawler Python Tutorial For Beginners

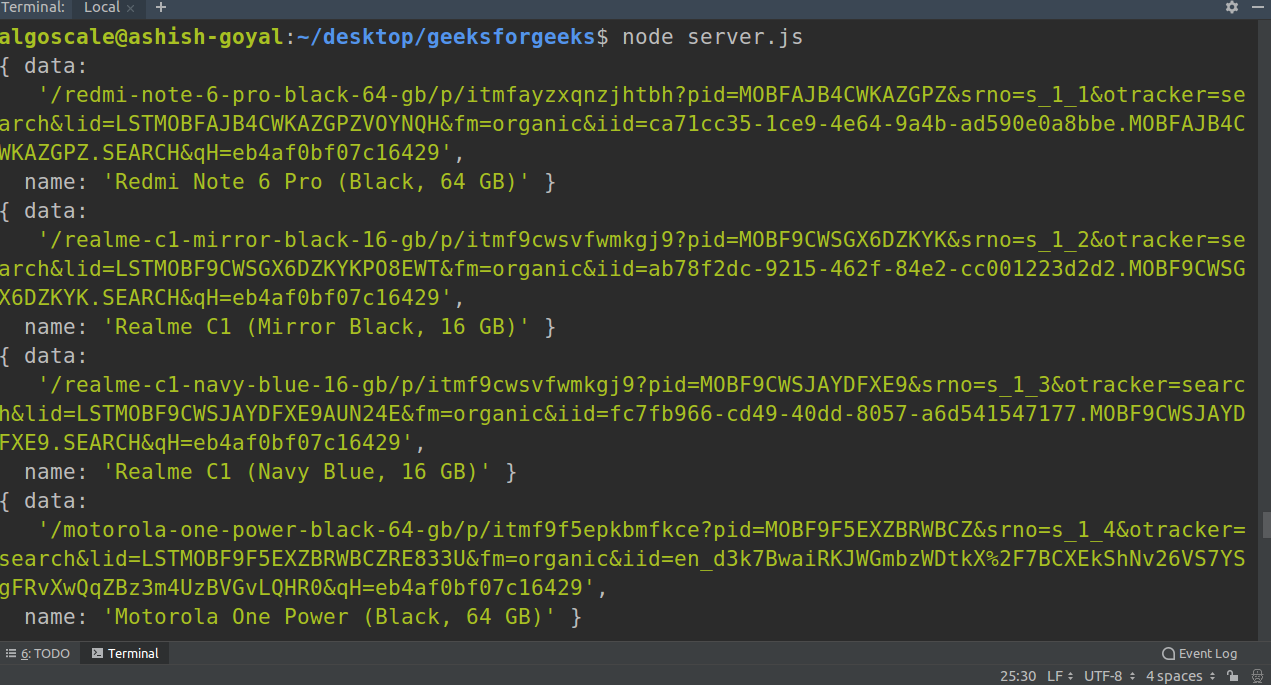

Nodejs Web Crawling Using Cheerio Geeksforgeeks

Nodejs Web Crawling Using Cheerio Geeksforgeeks

How Well Does Google Crawl Javascript Pretty Darn Well

How To Crawl Javascript Websites Sitebulb Com

How To Crawl Javascript Websites Sitebulb Com

Beautiful Soup Build A Web Scraper With Python Real Python

Beautiful Soup Build A Web Scraper With Python Real Python

Scaling Up A Serverless Web Crawler And Search Engine Aws

Scaling Up A Serverless Web Crawler And Search Engine Aws

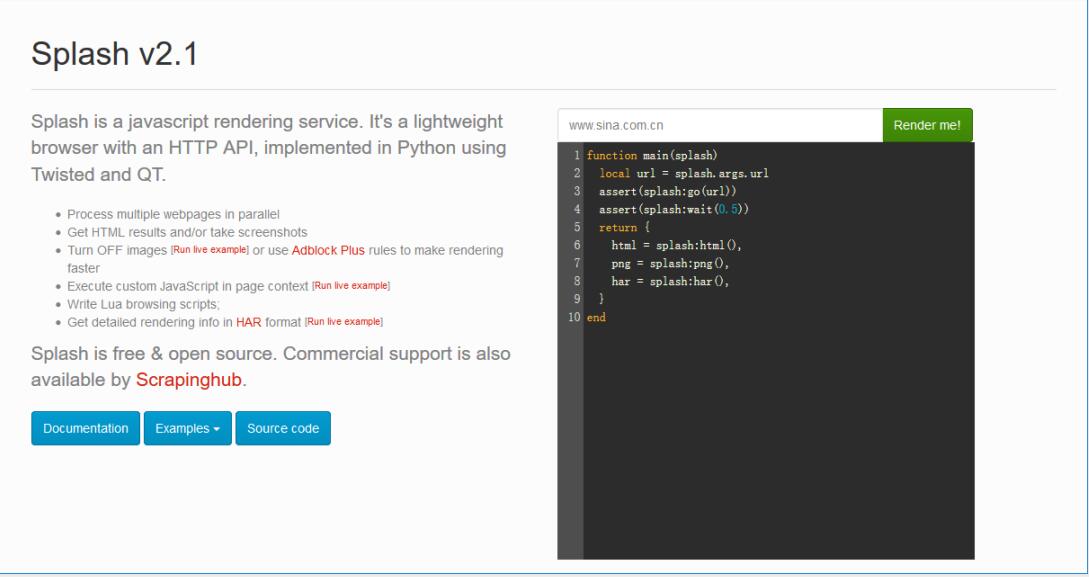

Web Crawler Development Get Started With Splash Ttproxy

Web Crawler Development Get Started With Splash Ttproxy

How To Create An Advanced Website Crawler With Jmeter By

How To Build A Web Crawler Python Tutorial For Beginners

How To Build A Web Crawler Python Tutorial For Beginners

Nodejs Web Crawling Using Cheerio Geeksforgeeks

Nodejs Web Crawling Using Cheerio Geeksforgeeks

How To Crawl Javascript Websites Sitebulb Com

How To Crawl Javascript Websites Sitebulb Com

How To Make A Web Crawler In Javascript Node Js

Configuring Anti Crawler Rules To Prevent Crawler Attacks Web

Configuring Anti Crawler Rules To Prevent Crawler Attacks Web

How To Crawl A Static Website In Javascript In 4min

How To Crawl A Static Website In Javascript In 4min

How To Scrape Websites Using Puppeteer Amp Node Js Tutorial

How To Scrape Websites Using Puppeteer Amp Node Js Tutorial

How Well Does Google Crawl Javascript Pretty Darn Well

How To Crawl A Static Website In Javascript In 4min

How To Crawl A Static Website In Javascript In 4min

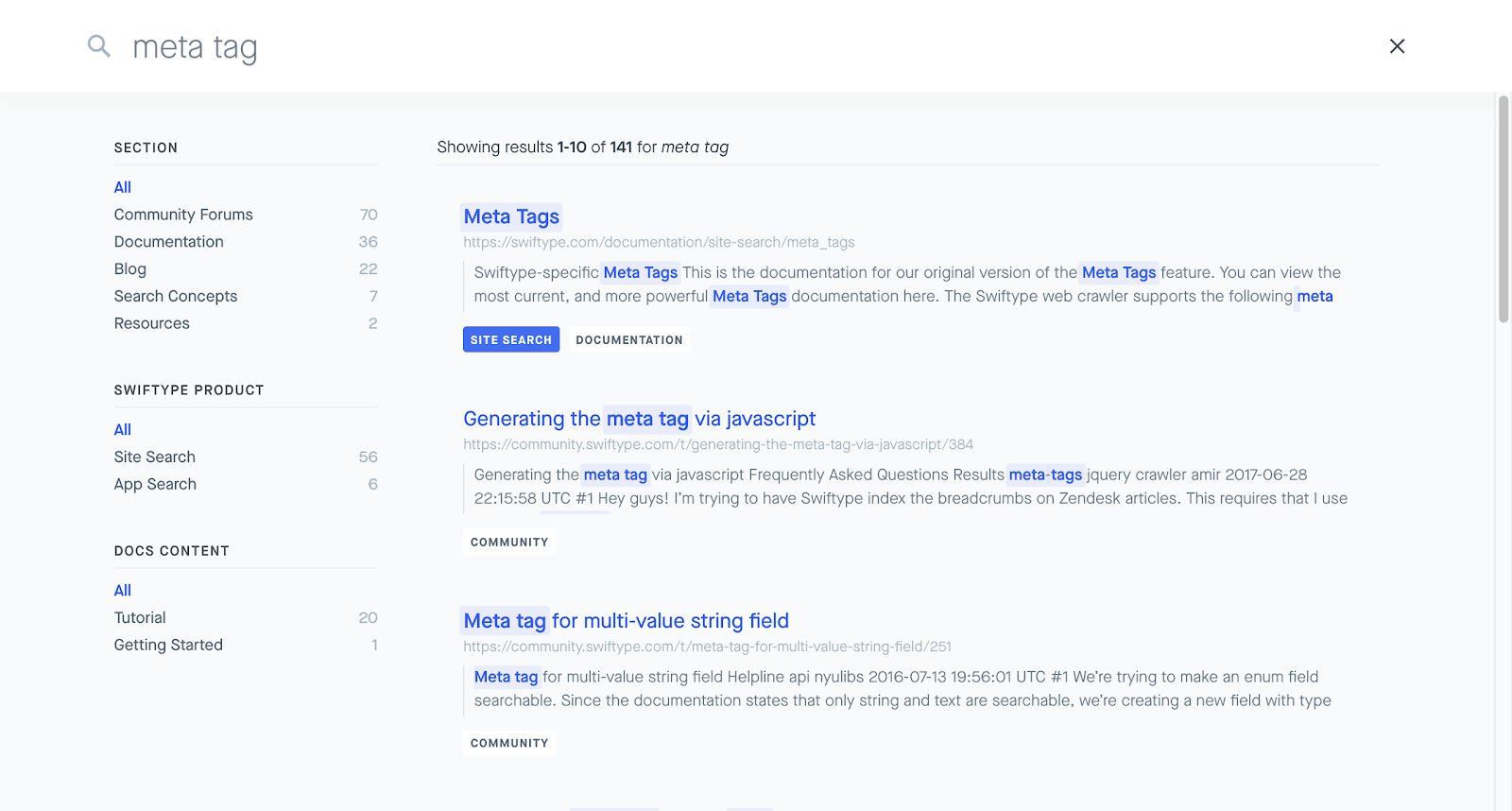

Crawl Code Search Elastic Site Search And Elastic App

Crawl Code Search Elastic Site Search And Elastic App

Web Scraping Javascript Page With Python Stack Overflow

Web Scraping Javascript Page With Python Stack Overflow

Scrapy Python How To Make Web Crawler In Python Datacamp

Scrapy Python How To Make Web Crawler In Python Datacamp

Web Scraping With Javascript And Nodejs

Web Scraping With Javascript And Nodejs

Web Crawler 101 What Is A Web Crawler And How It Works

Web Crawler 101 What Is A Web Crawler And How It Works

Node Js Web Scraping Tutorial Logrocket Blog

Node Js Web Scraping Tutorial Logrocket Blog

Jsoup Basic Web Crawler Example Mkyong Com

0 Response to "28 How To Make A Web Crawler Javascript"

Post a Comment